VR Video Pitfalls and Solutions

It is difficult to make a good VR video without access to a VR headset, because many potential problems are not apparent when you look at the warped side-by-side or over/under video in a non-VR player on a regular screen. Even with a headset, some problems are very subtle or only bother a subset of viewers. This article covers both the most common problems in VR video creation and the ones that are rarely discussed.

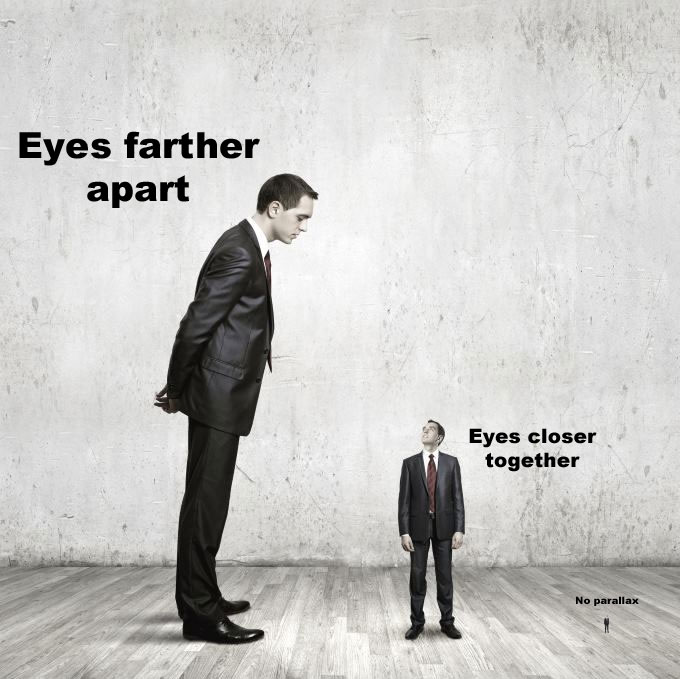

Scale

Perceived scale is controlled by the distance between the cameras. For real-world scale, use 6.4 cm, the average adult interpupillary distance (IPD). If you're not using a program with real-world units, or if your models are of unknown scale, try matching the distance between the cameras to the distance between the eyes of your characters. This only works for realistically proportioned characters. In any case, the best thing to do is make test renders and view them in a head-mounted display (HMD).

To correct scale problems:

- If everything around you looks too small, shrink your perspective by decreasing the distance between the cameras.

- If everything around you looks too big, grow your perspective by increasing the distance between the cameras.

Think about it in percentages. If everything is 30% too big, increase the distance between the cameras by 30%. How big things feel is inversely proportional to the intercamera distance (ICD), so a given percentage error in perceived size is corrected by the same percentage change in ICD.
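The percentage rule can be sketched as a small helper. This is only an illustration, assuming perceived size is exactly inversely proportional to camera separation as described above; the function name `corrected_icd` and its parameters are hypothetical and do not come from any particular tool.

```python
def corrected_icd(current_icd_cm: float, perceived_scale: float) -> float:
    """Return the camera separation that should restore real-world scale.

    perceived_scale is how large the world feels relative to correct:
    1.3 means everything feels 30% too big, 0.8 means 20% too small.
    Assumes perceived size is inversely proportional to camera separation.
    """
    if current_icd_cm <= 0 or perceived_scale <= 0:
        raise ValueError("separation and perceived scale must be positive")
    return current_icd_cm * perceived_scale


if __name__ == "__main__":
    # World feels 30% too big at 5.0 cm, so widen the cameras by 30%.
    print(round(corrected_icd(5.0, 1.3), 2))
    # World feels 20% too small at 8.0 cm, so narrow the cameras by 20%.
    print(round(corrected_icd(8.0, 0.8), 2))
```

Treat the result as a starting point for the next test render rather than a final value, since judging "30% too big" in a headset is itself an estimate.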

Parallel vs Converging Cameras

Toe-in (converging) cameras cause perceived scale to change with distance to camera more noticeably than parallel cameras.

Both configurations have this issue, but toe-in exaggerates it. Toe-in cameras do capture some stereo at the sides of the image, but that benefit is far outweighed by the unnatural shift in perceived scale. VR video should ALWAYS use a parallel (or near-parallel) camera setup. Toe-in setups are a relic of 3D video, from a time when a lot of effort was spent on using camera intersection planes as another means of focusing attention. The perceptual scale issue didn't matter there, because 3D video doesn't have a fixed viewing distance, which already causes perceived scale to shift. 3D video also isn't shot with cameras separated by a realistic IPD; the perceptual scale is chosen for each shot with aesthetic intent. None of those techniques has any value for VR video.

Gaze-contingent stereo rendering could solve this issue completely, but it is more likely to be used in real-time VR with eye tracking.

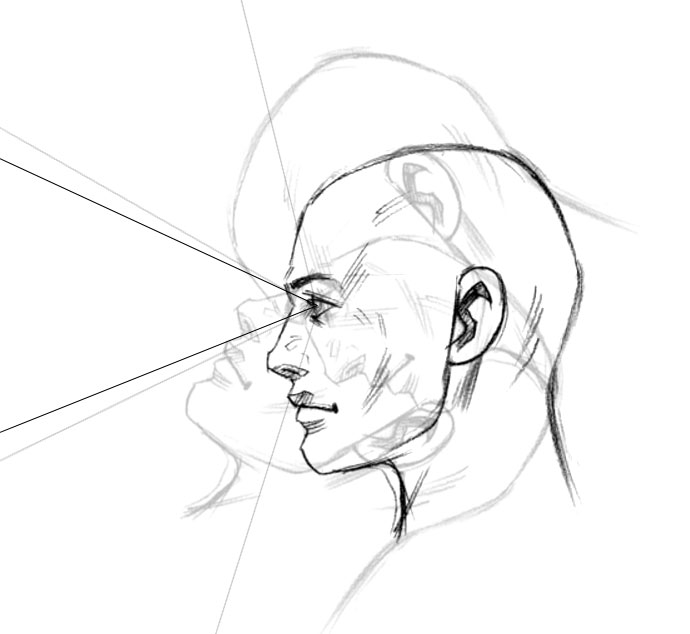

Eye Pivot

When placing a camera for an embodied scene, it would seem to make sense to place the camera as close as possible to the avatar's eyes. But this position is used not only for looking forward, but for looking up and down as well. From the viewer's perspective, they are pivoting at this point in space, as though their whole head were pinned in place by their eyes. When you look down from this perspective, your body will appear too far away, and the base of your neck will be slightly too far in front of you. The compromise that has been found to work best is placing the camera right in front of the avatar's nose. This gives some forward distance, so the avatar's chest is farther back, as it would be when you tilt your head down and your eyes travel forward. It also moves the viewpoint slightly lower than actual eye height, which brings it closer to the neck and body. When looking forward or up, this slight inaccuracy isn't noticeable, because it is so small and there is no nearby point of reference that would reveal the discrepancy.

Rolled Horizon

VR video players orient video according to gravity by default, with only a tilt option (more accurately labeled pitch) to angle the video up and down. If the video was captured with a rolled horizon, viewers will naturally try to roll their head to match the horizon. The image only contains horizontal stereo disparity, so rolling your head will cause the image to break up vertically, as one eye is now physically lower than the other in space. Beyond only a few degrees of roll, your brain can no longer fuse the image.
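A rough way to see why head roll breaks fusion is to split the image's horizontal disparity into the part that still lies along the line between the viewer's eyes and the part that now lies across it. The sketch below is a simplified model, assuming the disparity can be treated as a flat 2D vector near the center of view; the function name `disparity_in_eye_frame` is hypothetical, and no fusion threshold is implied.

```python
import math


def disparity_in_eye_frame(horizontal_disparity_deg: float,
                           head_roll_deg: float) -> tuple[float, float]:
    """Split a world-horizontal disparity into components seen by rolled eyes.

    Returns (along_eye_axis, across_eye_axis) in degrees. The across-axis
    part is what the viewer experiences as vertical disparity.
    """
    roll = math.radians(head_roll_deg)
    along = horizontal_disparity_deg * math.cos(roll)
    across = horizontal_disparity_deg * math.sin(roll)
    return along, across


if __name__ == "__main__":
    # Print the split for a 2 degree disparity at several roll angles.
    for roll_deg in (0, 5, 15, 30):
        along, across = disparity_in_eye_frame(2.0, roll_deg)
        print(f"roll {roll_deg:>2} deg: along={along:.3f}, across={across:.3f}")
```

In this model the vertical component grows with the sine of the roll angle, so nearby objects, which have the largest disparity, are the first to break up.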

Avoid any camera roll, except for videos created to only be viewed at an angle, such as lying on your side. For these videos, capture with the camera rolled, but then counter-roll each eye back to a flat horizon, and finally add a zero-parallax guide image at the beginning of the video with a 2D indicator showing how users should tilt their head to view.

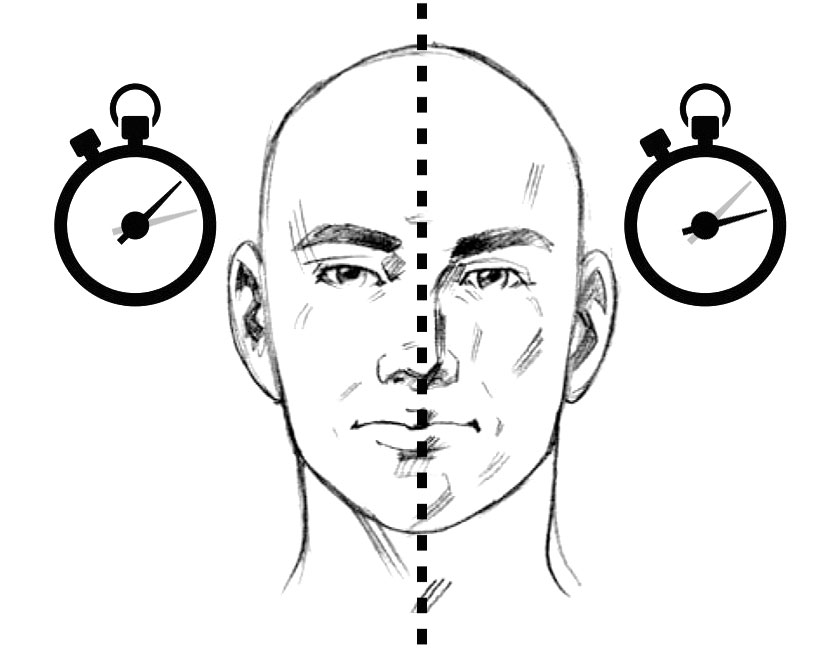

Left/Right Desync

VR video captured with unsynchronized cameras may exhibit desynchronization, where one eye's footage runs ahead of or behind the other's. Use synchronized cameras or specialized retiming software to correct this. A clapperboard at the beginning and end of a shot can help.

Computer-generated VR video may exhibit a different kind of desynchronization. If the VR video involves a non-deterministic physics simulation, and the left and right eyes are not rendered simultaneously, the results of the simulation may differ between the two passes. This creates a headache-inducing effect, because the positions of simulated elements will not match between the eyes. To avoid this, use a deterministic physics simulation, use a pre-computed physics simulation, or render both eyes at the same time.
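The pre-computed option can be illustrated with a toy example: run the simulation once, cache the per-frame results, and feed the same cached data to both eye passes. Everything here is a hypothetical stand-in (the jittering 1D "particle", `simulate`, `render_eye`, `render_stereo_baked`); a real pipeline would bake to whatever cache format its renderer supports.

```python
import random


def simulate(num_frames: int, rng: random.Random) -> list[float]:
    """Toy 'physics': a 1D particle nudged by random jitter each frame."""
    x = 0.0
    positions = []
    for _ in range(num_frames):
        x += rng.uniform(-1.0, 1.0)
        positions.append(x)
    return positions


def render_eye(positions: list[float], eye_offset: float) -> list[float]:
    """Stand-in for a render pass: particle position relative to one eye."""
    return [p - eye_offset for p in positions]


def render_stereo_baked(num_frames: int, icd: float = 6.4):
    """Run the simulation once, then render both eyes from the cached result."""
    baked = simulate(num_frames, random.Random())  # unseeded: non-deterministic
    left = render_eye(baked, -icd / 2)
    right = render_eye(baked, +icd / 2)
    return left, right


if __name__ == "__main__":
    left, right = render_stereo_baked(5)
    # The two eyes differ only by the fixed camera offset on every frame.
    print([round(l - r, 6) for l, r in zip(left, right)])
```

Calling `simulate` separately for each eye would instead give two unrelated particle paths, which is the mismatch described above.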

Stereo Disparity Deadzones

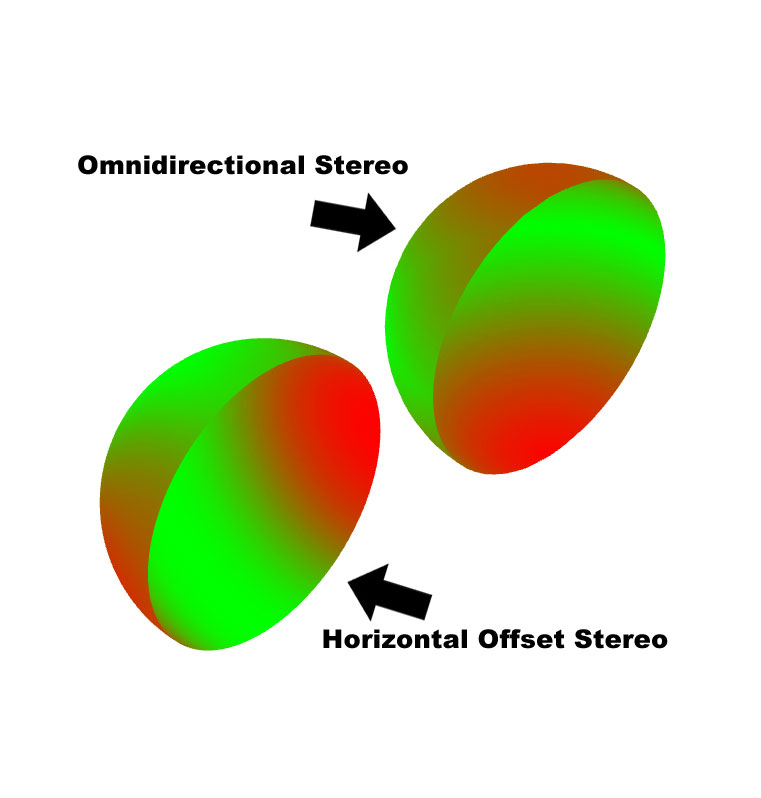

Capturing what a left and right eye would see with two side-by-side cameras is called Horizontal Offset Stereo (HOS). Real and simulated 180 stereo fisheye cameras lose stereo toward the far left and right of the image. This is because the effective left/right offset between the cameras falls to zero at steep horizontal angles.
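The falloff can be approximated with basic geometry: for two parallel cameras, the separation that still lies perpendicular to a given viewing direction shrinks with the cosine of the horizontal angle. The sketch below assumes idealized point cameras and a purely horizontal angle between -90 and 90 degrees; `effective_baseline_cm` is a hypothetical name used only for illustration.

```python
import math


def effective_baseline_cm(icd_cm: float, yaw_deg: float) -> float:
    """Camera separation as seen perpendicular to a viewing direction.

    yaw_deg is the horizontal angle away from straight ahead,
    expected to be within -90..90 for a 180 degree image.
    """
    return icd_cm * math.cos(math.radians(yaw_deg))


if __name__ == "__main__":
    # Print the remaining left/right offset across half of the 180 degree view.
    for yaw in (0, 30, 60, 80, 90):
        print(f"yaw {yaw:>2} deg: {effective_baseline_cm(6.4, yaw):.2f} cm")
```

In this model half of the stereo offset is already gone at 60 degrees off-center, and at 90 degrees the cameras are lined up one behind the other, leaving only an in/out offset.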

Also note that the lenses of a real stereo fisheye camera can see each other. The visible lens area must be removed, and the empty patch filled in with image data from the other lens. This can help to hide the small amount of in/out parallax that results when the left/right offset becomes an in/out offset at the steep angle. It can also cause some image breakup if very near objects pass in and out of the patched area.

360 Omni-directional Stereo (ODS) is a stereo approximation method achieved by means of slit-scan cameras or manipulated scene geometry. ODS imagery (rendered, or captured and stitched) loses stereo above and below the viewer. If you want VR video with a body, you need stereo below you, where your body is. Up to this point, embodied VR video has had the most success with HOS. In a later article I will present an alternate method for rendered 180 VR video that merges these two approaches.